Google I/O 2025 was huge for AI – here's everything announced

The company's developer conference is starting soon, and we're covering all the biggest announcements live

📅 Google is hosting its annual I/O developer conference today

🤖 We expect to hear plenty about Gemini, AI, Android 16, Android XR, and more

👀 There’s always a chance Google has some surprises in store

🧑‍💻 The Shortcut is tracking all of the updates in this liveblog

🚨 The event begins at 10 a.m. PT / 1 p.m. ET

2:56 PM ET: And that’s a wrap! Thanks for following along with my coverage of Google I/O 2025. Be sure to subscribe to The Shortcut for coverage like this in the future.

2:52 PM ET: Lol.

2:51 PM ET: Google got Gentle Monster and Warby Parker to commit to developing glasses with Android XR built into them.

2:50 PM ET: Live translation between Android XR glasses seems insane. The demo wasn't great, but the concept is there.

2:45 PM ET: These glasses seem really advanced. They can even play videos on the miniature display.

2:44 PM ET: Google is announcing new smart glasses powered by Android XR with Gemini integration, cameras, microphones, speakers, and a mini display. They’re very reminiscent of Meta’s Ray-Ban glasses.

2:42 PM ET: Yeah, I could get used to watching sports like this.

2:40 PM ET: Google just touched on Android 16 a bit (which you can read more about here). Now, we're moving on to Android XR…

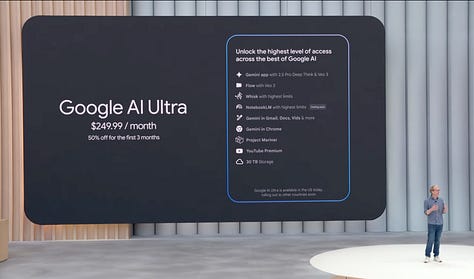

2:37 PM ET: Google is rolling out two new plans for AI: Google AI Pro and Google AI Ultra. The former is $19.99/month and the latter is (drum roll please) $249.99/month. One is strictly for consumers, while the other is for the most enthusiastic AI users on the globe. Here's a breakdown of what you get.

2:35 PM ET: Using AI in art is one of the hardest cases to make, and Google seems to be doing a decent job of pitching it as an extra tool in your utility belt. However, it's also proving you can straight-up make movies with AI, which… ew.

2:30 PM ET: Google has been showing off some of its AI generation models like Lyria and Veo. The company is trying to make the case for artists to lean on AI more often to help tell stories, get inspiration, and more.

2:24 PM ET: Big upgrades for Gemini at I/O 2025.

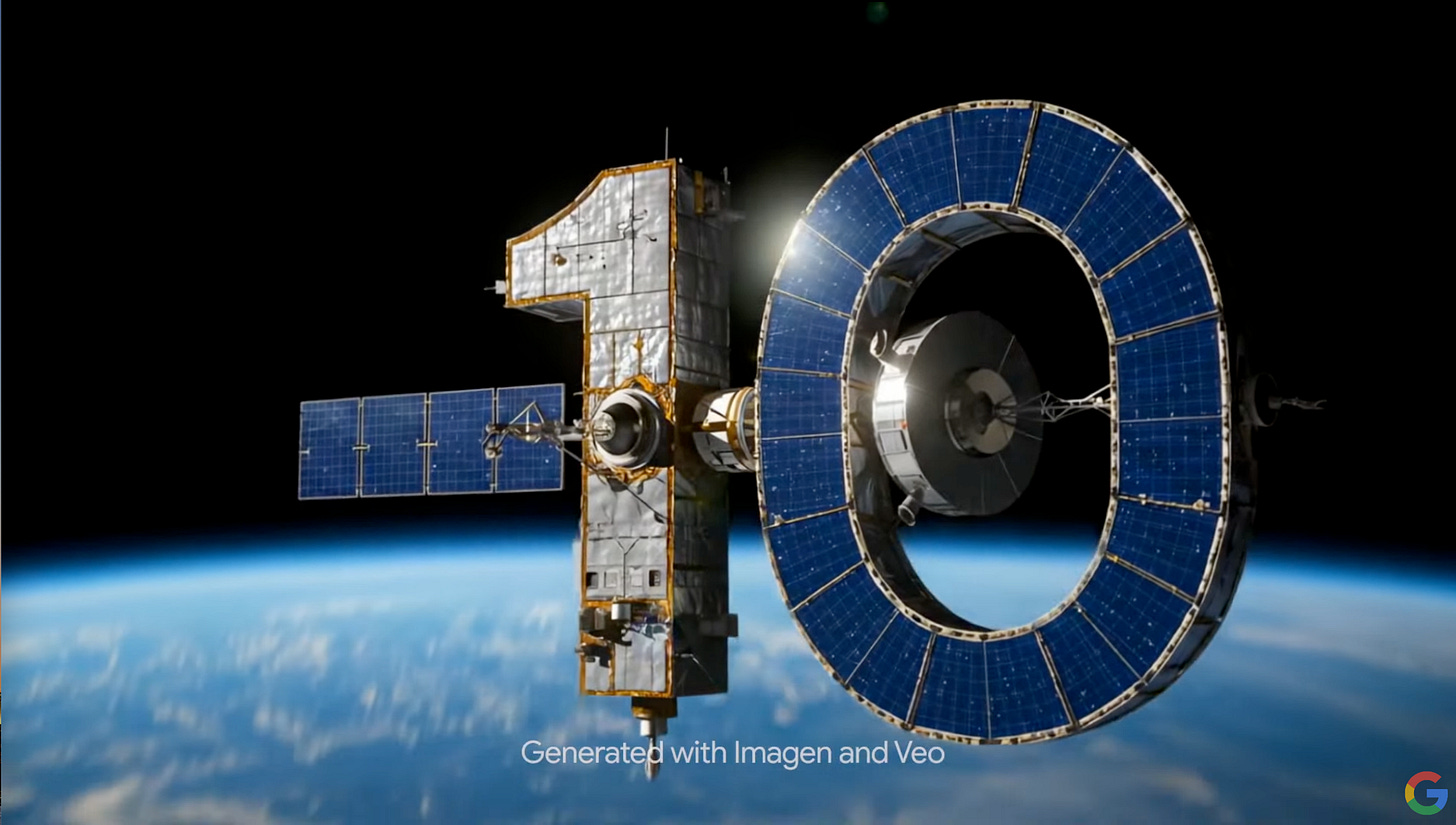

2:21 PM ET: Google is also rolling out Veo 3, a new video generation model that comes with native audio generation that can create sound effects, dialog, scores, and more. It’s being released today. It looks absolutely insane.

2:20 PM ET: Google is introducing Imagen 4, a new image generation model that’s better at handling text, shadows, textures, and more. It’s 10x faster than the previous model.

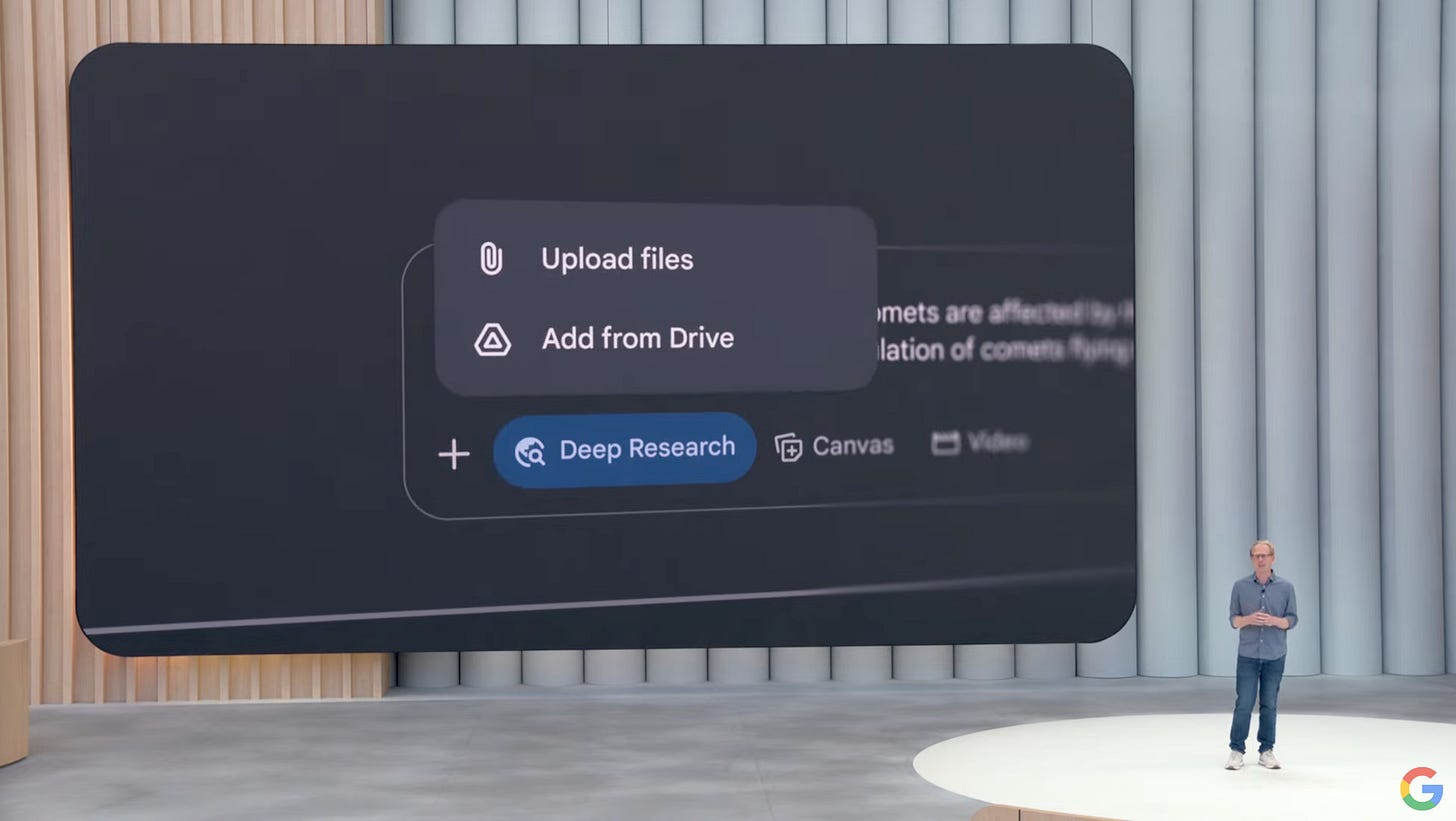

2:17 PM ET: Deep Research, which ties into AI Mode and Gemini across Google services, will let you upload your own files and store them for future reference.

2:14 PM ET: Google is going over personalized context again: how personal it can be and how it can learn things about you. I feel like I'm in Groundhog Day; they're repeating themselves.

2:10 PM ET: A new era for Google Search.

2:08 PM ET: You can generate an AI image of you in clothes you wanna buy online using AI Mode. Seems handy.

2:06 PM ET: Google’s AI Mode for shopping seems pretty interesting.

2:03 PM ET: Google is going to roll out Search Live, which seems similar to Gemini Live but is built into Search and designed for more ordinary queries. It integrates your camera and microphone for a more interactive experience.

2:00 PM ET: Wow, Google Lens has over 1.5 billion users every month.

1:55 PM ET: AI Mode will learn about you across the Google services you use and let you enable Personal Context whenever you want for more personalized search results. You can disable it if you want, but one thing will make me keep it on once I gain access: Google says it can learn that you like outdoor seating when booking dinner reservations, and I always prefer sitting outside. Therefore, it will remain on.

1:53 PM ET: Google’s new AI Mode can do a lot of stuff. It seems like the company’s answer to Perplexity and Microsoft Copilot, while also being the first indication that Google Search could be on the way out in the future.

1:50 PM ET: More praise for AI Overviews in Google Search. Google showed off its new AI Mode which takes over the entire search experience and allows for more complex queries. It’s powered by Gemini 2.5 and is rolling out to all users in the US starting today. Get ready for your grandparents to bug you about why their computer keeps saying AI.

1:44 PM ET: Me on X: “Insane how powerful AI is gonna be in the future but right now all we use it for is to summarize our notifications and write emails we're too lazy to type.”

1:42 PM ET: Google just demoed how Gemini Live and Project Astra (y'know, even MORE advanced AI) can be your assistant when fixing your bike, from looking up an instruction manual online to calling repair shops to recommending the screws and bolts in front of you when you point your camera at them.

1:36 PM ET: Google wants Gemini to become a “world model” in which it interprets the world like the human brain does. It seems to be on pace to deliver this eventually.

1:34 PM ET: Yes, LOTS of Gemini talk. This is a very developer-focused keynote. Not that I/O isn't always developer-focused, but this year especially, it seems that Google just wants devs to work on AI so that it's in more places.

1:31 PM ET: Google showed a demo of Gemini 2.5 in Google’s AI Studio that edited the code of a photo gallery app to add a 3D sphere effect for viewing photos, all based on a rough sketch of what they wanted the effect to do. Very impressive.

1:24 PM ET: THE COMPUTERS WHISPER TO US NOW. (Context: Google improved the voices in Gemini 2.5 to sound more natural and be able to flow between different volumes and languages.)

1:22 PM ET: Google updated its Gemini 2.5 Flash model and it’s starting to roll out.

1:19 PM ET: Gmail is getting more personalized responses. Using Gemini, Gmail will be able to generate full replies to emails based on how you sound in your previous emails. The demo on stage seemed pretty impressive. It’s rolling out this summer to subscribers.

1:17 PM ET: A new Agent Mode will come soon to the Gemini app for subscribers. I'm curious to try it out; it seems very powerful. Google showed a demo of two friends who want to find an apartment to rent, and Agent Mode was able to search listings based on all of their criteria and even schedule a tour on their behalf.

1:15 PM ET: Google is focusing heavily on agents for AI, which will help add new utility to Gemini and other Google products.

1:14 PM ET: Camera and microphone sharing with Gemini Live is rolling out to Android and iOS now, letting you feed the AI model live video and ask it questions about what's around you.

1:13 PM ET: Wait, Gemini is kind of a smart ass. "Gemini is pretty good at telling you when you're wrong," Pichai says.

1:12 PM ET: Google is rolling out live translation for Google Meet video calls with support for English and Spanish. More languages are coming soon, and the feature will reach enterprises later this year.

1:10 PM ET: Google has announced Google Beam, the consumer-facing version of Project Starline that lets you have 3D video calls using a special HP monitor. It uses six cameras and AI to make it look like you’re in the room with someone.

1:09 PM ET: Google’s generative AI search results are being used by more people than ever. Google Search is delivering generative AI to more people than any product in the world.

1:08 PM ET: Google is talking about how fast Gemini is being adopted by users. It’s on the rise, folks.

1:06 PM ET: Faster Ironwood chips are coming to Google Cloud customers later this year, helping to increase the speed of AI models.

1:05 PM ET: Sundar says Gemini beat a Pokémon game playthrough. If this means something to anyone here, please let me know.

1:04 PM ET: Sundar Pichai has arrived, and he’s talking AI models and the speed at which Google ships them. The company wants to ship them at a “relentless pace” to keep up with rapid model progress. It does seem like every other day, Google is shipping a new Gemini model, so this adds up.

1:02 PM ET: Yeah… it’s just AI art right now. This is boring. Like, we get it - you can put tigers in the wild west.

1:01 PM ET: Starting with AI art, which makes a lot of sense given the subject matter of Google’s keynotes nowadays.

12:55 PM ET: Second cup of coffee has been brewed. Milk = stirred in. Let’s get this party started.

One of the biggest days of the year for Google is here. The company is hosting its annual I/O developer conference today, and it's kicking things off with a live keynote address from Mountain View, California. The show starts at 10 a.m. PT / 1 p.m. ET, and we're giving you live updates as they unfold.

The show is expected to last around an hour and a half to two hours and focus primarily on AI. Last week, Google hosted a special show in which it detailed some of the biggest changes coming to Android 16, likely to make room for other topics during the main I/O keynote. Those topics will likely include Gemini, other forms of AI, Android XR, and more.

I’m sitting comfortably at my desk in New York City with a cup of coffee, ready to cover all of the biggest news during the show. Keep this page loaded and refresh it once in a while for up-to-the-minute commentary on what’s being announced at I/O.

Max Buondonno is an editor at The Shortcut. He’s been reporting on the latest consumer technology since 2015, with his work featured on CNN Underscored, ZDNET, How-To Geek, XDA, TheStreet, and more. Follow him on X @LegendaryScoop and Instagram @LegendaryScoop.